Automating Serverless Workflows with AWS Step Functions: A Beginner's Guide

AWS Step Functions is a service that lets you coordinate and chain multiple AWS services into serverless workflows. This blog is a beginner-friendly guide to automating serverless workflows with AWS Step Functions, and we'll explore the concept through a simple but powerful Order Processing use case.

Why You Need AWS Step Functions

In serverless applications, you often need to chain several Lambda functions together to complete a task—such as processing orders, approving requests, or transforming data. Traditionally, you’d handle this orchestration in code, which can quickly become a maintenance headache.

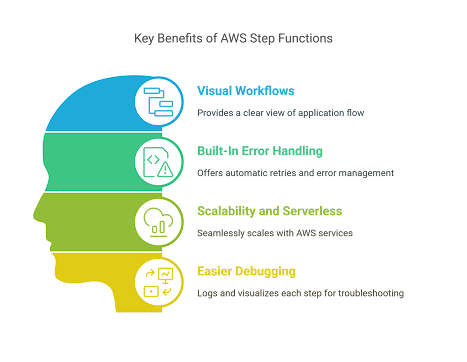

AWS Step Functions lets you:

- Visually design workflows as state machines
- Handle retries, timeouts, and errors gracefully
- Easily integrate with other AWS services
- Monitor and debug workflows using the AWS Console

Use Case: Automating Order Processing Workflow

Let’s walk through a simple order processing system using AWS Step Functions and Lambda functions. The steps include:

- Receive Order
- Validate Payment
- Check Inventory
- Dispatch Order
- Notify Customer

Each step is implemented as an AWS Lambda function written in Node.js.

Step 1: Create the Lambda Functions

You’ll need five basic Lambda functions. Here’s a quick overview:

1. Receive Order

    exports.handler = async (event) => {
      console.log("Order received:", event);
      return { orderId: event.orderId, status: "RECEIVED" };
    };

2. Validate Payment

    exports.handler = async (event) => {
      console.log("Validating payment for:", event.orderId);
      // Assume payment is valid
      return { ...event, paymentStatus: "VALID" };
    };

3. Check Inventory

    exports.handler = async (event) => {
      console.log("Checking inventory for:", event.orderId);
      // Assume inventory is available
      return { ...event, inventoryStatus: "AVAILABLE" };
    };

4. Dispatch Order

    exports.handler = async (event) => {
      console.log("Dispatching order:", event.orderId);
      return { ...event, dispatchStatus: "DISPATCHED" };
    };

5. Notify Customer

    exports.handler = async (event) => {
      console.log("Notifying customer for order:", event.orderId);
      return { ...event, notification: "SENT" };
    };
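Before wiring these into a state machine, it can help to see how the payload grows as it passes from one function to the next. The sketch below is a local dry run, not part of the deployed workflow. It assumes the five handlers live in one file under the illustrative names `receiveOrder`, `validatePayment`, and so on, and it uses a hypothetical `runLocally` helper to feed each return value to the next handler, which mirrors how a plain chain of Task states passes output along by default.

```javascript
// Local dry run of the five handlers from this section (console.log calls omitted).
// The handler names and runLocally are illustrative, not part of any AWS API.
const receiveOrder = async (event) => ({ orderId: event.orderId, status: "RECEIVED" });
const validatePayment = async (event) => ({ ...event, paymentStatus: "VALID" });
const checkInventory = async (event) => ({ ...event, inventoryStatus: "AVAILABLE" });
const dispatchOrder = async (event) => ({ ...event, dispatchStatus: "DISPATCHED" });
const notifyCustomer = async (event) => ({ ...event, notification: "SENT" });

const steps = [receiveOrder, validatePayment, checkInventory, dispatchOrder, notifyCustomer];

// Feed each handler's return value to the next one, in order.
async function runLocally(input) {
  let payload = input;
  for (const step of steps) {
    payload = await step(payload);
  }
  return payload;
}

runLocally({ orderId: "ORDER123" }).then((result) => console.log(result));
```

The final payload carries every status field added along the way, which is what the last state of the workflow would return for the same input.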

Step 2: Define the State Machine

Next, use Amazon States Language (ASL) to define your workflow. Here’s a simplified version of the definition:

    {
      "StartAt": "ReceiveOrder",
      "States": {
        "ReceiveOrder": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:ReceiveOrder",
          "Next": "ValidatePayment"
        },
        "ValidatePayment": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:ValidatePayment",
          "Next": "CheckInventory"
        },
        "CheckInventory": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:CheckInventory",
          "Next": "DispatchOrder"
        },
        "DispatchOrder": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:DispatchOrder",
          "Next": "NotifyCustomer"
        },
        "NotifyCustomer": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:NotifyCustomer",
          "End": true
        }
      }
    }

You can paste this into the Step Functions visual editor, replacing the Lambda ARNs with your own.
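To make the roles of `StartAt`, `Next`, and `End` concrete, here is a small sketch that follows those fields through a definition and returns the order in which the states would run. It is a local illustration only: `executionOrder` is a hypothetical helper, it handles just the linear Task chain used in this guide (no Choice, Parallel, or Map states), and the `Resource` ARNs are left out because the walk does not need them.

```javascript
// Trimmed copy of the definition above: only the fields the walk needs.
const definition = {
  StartAt: "ReceiveOrder",
  States: {
    ReceiveOrder: { Type: "Task", Next: "ValidatePayment" },
    ValidatePayment: { Type: "Task", Next: "CheckInventory" },
    CheckInventory: { Type: "Task", Next: "DispatchOrder" },
    DispatchOrder: { Type: "Task", Next: "NotifyCustomer" },
    NotifyCustomer: { Type: "Task", End: true },
  },
};

// Hypothetical helper: follow StartAt -> Next -> ... until a state with End: true.
function executionOrder(def) {
  const order = [];
  const seen = new Set();
  let name = def.StartAt;
  while (true) {
    const state = def.States[name];
    if (!state) throw new Error(`State "${name}" is not defined`);
    if (seen.has(name)) throw new Error(`State "${name}" is visited twice (loop)`);
    seen.add(name);
    order.push(name);
    if (state.End === true) return order;
    if (!state.Next) throw new Error(`State "${name}" has neither Next nor End`);
    name = state.Next;
  }
}

console.log(executionOrder(definition).join(" -> "));
// prints "ReceiveOrder -> ValidatePayment -> CheckInventory -> DispatchOrder -> NotifyCustomer"
```

A misspelled `Next` target makes the helper throw, which is a cheap way to catch typos before pasting a definition into the console.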

Step 3: Deploy with AWS Console or Infrastructure as Code

You can create your Lambda functions and state machine manually using the AWS Console, or automate the deployment using AWS SAM or Terraform. If you’re just starting out, the console method works great for learning.
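If you would rather stay in Node.js, one more option is to create the state machine through the AWS SDK for JavaScript v3. The following is a minimal sketch, not a full deployment pipeline. It assumes the `@aws-sdk/client-sfn` package is installed, AWS credentials are configured, and an IAM role that Step Functions can assume already exists. The state machine name `OrderProcessing`, the role name, and the helper names are placeholders chosen for illustration.

```javascript
// Sketch: create the state machine with the AWS SDK for JavaScript v3.
// Assumes `npm install @aws-sdk/client-sfn` and configured AWS credentials.

// Build the CreateStateMachine request. `definition` is the ASL object from
// Step 2; the API expects it as a JSON string.
function buildCreateInput(name, definition, roleArn) {
  return {
    name,
    definition: JSON.stringify(definition),
    roleArn, // role Step Functions assumes to invoke the Lambda functions
    type: "STANDARD",
  };
}

async function deploy(definition, roleArn, region) {
  // Required lazily so buildCreateInput can be tried without the SDK installed.
  const { SFNClient, CreateStateMachineCommand } = require("@aws-sdk/client-sfn");
  const client = new SFNClient({ region });
  const input = buildCreateInput("OrderProcessing", definition, roleArn);
  const response = await client.send(new CreateStateMachineCommand(input));
  return response.stateMachineArn;
}

// Example call (placeholders, not real values):
// deploy(definition, "arn:aws:iam::ACCOUNT_ID:role/StepFunctionsOrderRole", "REGION")
//   .then((arn) => console.log("Created:", arn));
```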

Step 4: Test the Workflow

Once deployed, you can test the state machine by passing in an input like:

    {
      "orderId": "ORDER123"
    }

Go to the Step Functions console and view the execution flow. You’ll see each step execute in sequence, with logs from each Lambda function.
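The same test can be started from code instead of the console. The sketch below assumes the `@aws-sdk/client-sfn` package and configured credentials. The state machine ARN you pass in is your own, the helper names are hypothetical, and the five-second polling interval is an arbitrary choice for illustration.

```javascript
// Sketch: start an execution and poll until it is no longer running.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// The StartExecution API takes the input as a JSON string, not an object.
function buildStartInput(stateMachineArn, order) {
  return { stateMachineArn, input: JSON.stringify(order) };
}

async function runExecution(stateMachineArn, order, region) {
  // Required lazily so buildStartInput can be tried without the SDK installed.
  const { SFNClient, StartExecutionCommand, DescribeExecutionCommand } =
    require("@aws-sdk/client-sfn");
  const client = new SFNClient({ region });
  const { executionArn } = await client.send(
    new StartExecutionCommand(buildStartInput(stateMachineArn, order))
  );

  // Poll until the execution leaves the RUNNING state.
  while (true) {
    const execution = await client.send(new DescribeExecutionCommand({ executionArn }));
    if (execution.status !== "RUNNING") {
      return { status: execution.status, output: execution.output };
    }
    await sleep(5000);
  }
}

// Example call (placeholder ARN):
// runExecution("arn:aws:states:REGION:ACCOUNT_ID:stateMachine:OrderProcessing",
//   { orderId: "ORDER123" }, "REGION").then(console.log);
```

For a successful run, `status` should come back as `SUCCEEDED` and `output` is a JSON string holding the payload returned by the last state.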

Benefits of This Approach

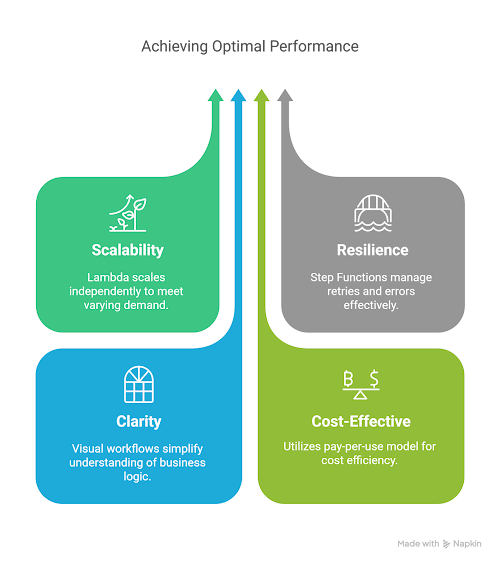

- Scalability: Each Lambda function scales independently based on demand.
- Resilience: Step Functions can retry failed steps and route errors to fallback states once you add Retry and Catch rules.
- Clarity: The visual workflow makes the business logic easier to understand.
- Cost-Effective: Pay-per-use pricing means you pay only when workflows run.

Best Practices

- Error Handling: Add Catch and Retry blocks in your state machine to gracefully handle failures.
- Timeouts: Define timeouts for long-running tasks.
- Security: Use IAM roles with least privilege for Lambda functions.
- Monitoring: Leverage CloudWatch Logs and AWS X-Ray for observability.
- Modularity: Break down your workflow into reusable Lambda functions.
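As a sketch of the first two practices, the helper below adds `TimeoutSeconds`, a `Retry` policy, and a `Catch` fallback to every Task state in a definition. The field names are standard Amazon States Language. The specific values (10-second timeout, three attempts, doubling backoff), the helper name `addErrorHandling`, and the `OrderFailed` fallback state are assumptions chosen for illustration, so tune them to your own tasks.

```javascript
// Hypothetical helper: return a copy of the definition where every Task state
// has a timeout, a retry policy, and a catch-all fallback.
function addErrorHandling(definition, fallbackState = "OrderFailed") {
  const states = {};
  for (const [name, state] of Object.entries(definition.States)) {
    states[name] =
      state.Type === "Task"
        ? {
            ...state,
            TimeoutSeconds: 10, // fail the step if it runs longer than this
            Retry: [
              {
                ErrorEquals: ["States.Timeout", "States.TaskFailed"],
                IntervalSeconds: 2, // wait before the first retry
                MaxAttempts: 3,
                BackoffRate: 2, // double the wait on each further attempt
              },
            ],
            // Once retries are exhausted, route any error to the fallback state
            // and keep the error details under $.error.
            Catch: [{ ErrorEquals: ["States.ALL"], Next: fallbackState, ResultPath: "$.error" }],
          }
        : state;
  }
  // Terminal Fail state that the Catch rules point to.
  states[fallbackState] = { Type: "Fail", Error: "OrderProcessingFailed" };
  return { ...definition, States: states };
}

// Usage with a one-state definition (the ARN is a placeholder).
const hardened = addErrorHandling({
  StartAt: "ValidatePayment",
  States: {
    ValidatePayment: {
      Type: "Task",
      Resource: "arn:aws:lambda:REGION:ACCOUNT_ID:function:ValidatePayment",
      End: true,
    },
  },
});
console.log(JSON.stringify(hardened, null, 2));
```

Generating the blocks from one helper keeps the retry policy consistent across steps. In a real workflow you would likely want different limits per task, for example fewer retries on the payment step.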

Wrapping Up

AWS Step Functions is a powerful tool for orchestrating serverless workflows. By combining it with Lambda functions, you can build scalable, maintainable, and robust applications. Our order processing use case just scratches the surface. Imagine the workflows you can automate in your own projects!

Ready to automate your backend logic? Give Step Functions a spin and level up your serverless architecture game.

If you enjoyed this blog, share it with your developer friends and let me know how you’re using Step Functions in your projects. Follow for more hands-on AWS content!
